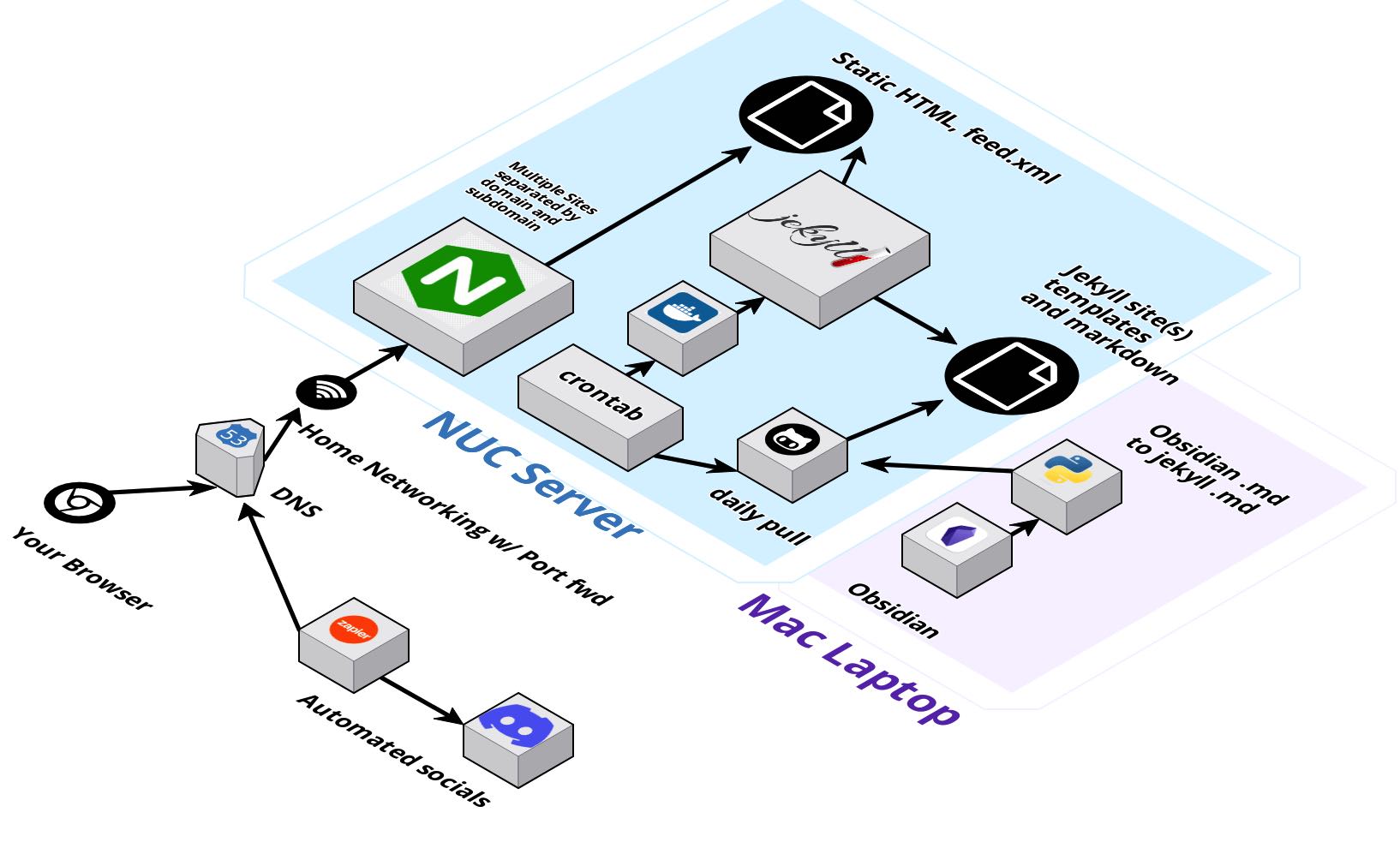

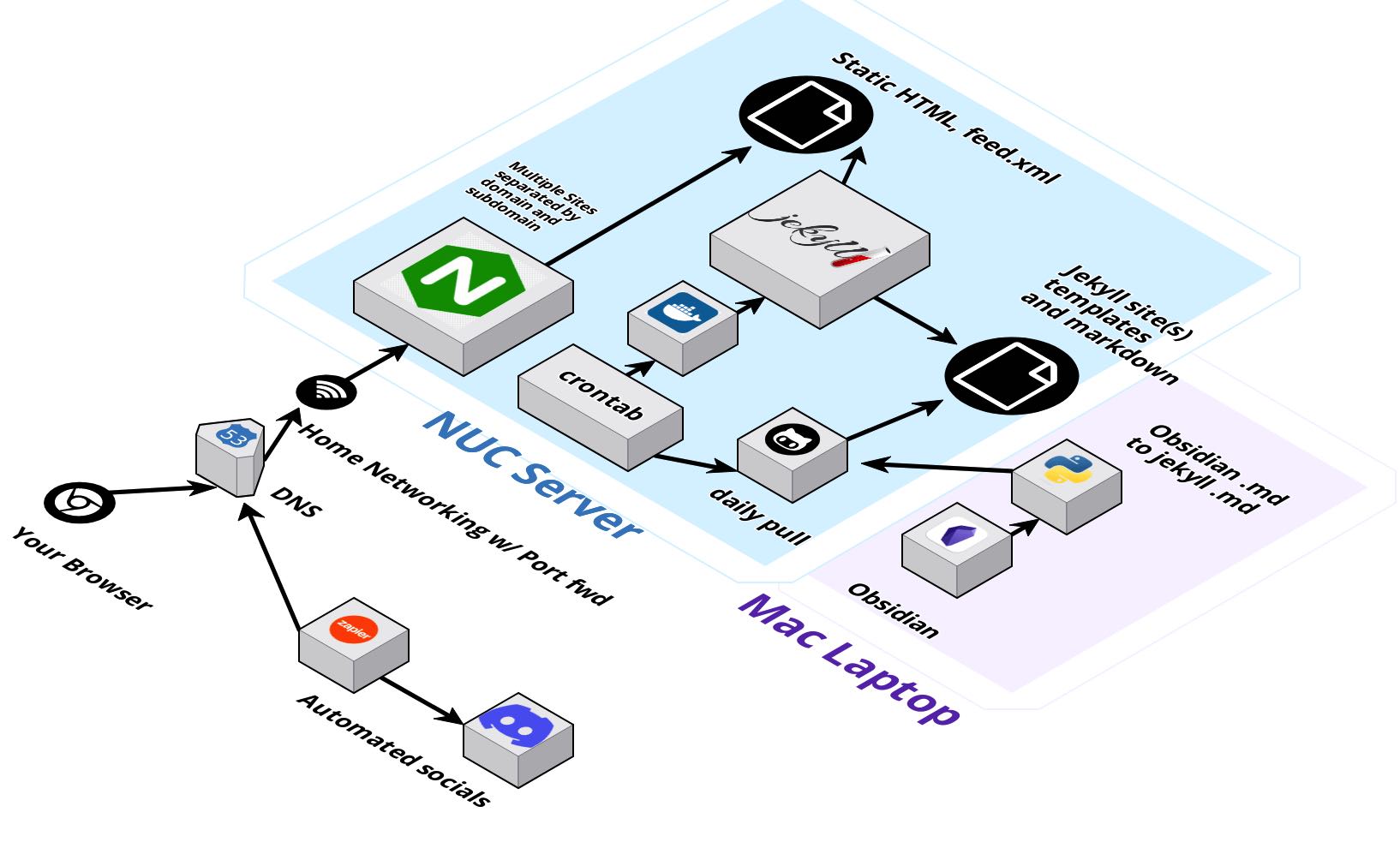

Self-Hosting with Jekyll and Obsidian

For the last several years, I’ve spent my personal time relearning art tools like Blender, Photoshop, and After Effects. In the last few months, though, my project time has swung back towards dev, and I’ve moved my personal web hosting off of SaaS services and back to self-managed software.

Up to this point, I’ve kept this and a few other blogs going through the static website generator Jekyll, which converts markdown into HTML to be hosted on GitHub Pages. Aside from domain registration, this is free, fast, and easy. Static sites are more secure and require less maintenance and attention than heavier tools that have data stores and vulnerable compute.

SaaS is great, but having my own hosting gives me more opportunity to exercise my front-end and back-end skills. So it’s time for a change.

Goals

- Keep my sysadmin skills sharp

- Run a 24/7 lab that generates telemetry, even if it is small

- Host static sites locally

- Utilize non-proprietary formats like markdown for longer-term archival preservation of content and separation

- Use Obsidian, a more efficient markdown tool than writing directly in Visual Studio Code

nginx multisite redirect

After Route 53 A records point DNS traffic at my server, the first real step in the chain is a fall-through nginx configuration that uses 301 redirects to coax all HTTP port 80 traffic towards HTTPS port 443.

I’m hosting 4 sites: this one, and 3 sites on a second domain.

# HTTPS redirect for no subdomain
server {
    listen 80;
    listen [::]:80;
    server_name front2backdev.com domain2.com;

    location /nginx_status {
        stub_status on;
        access_log off;
        allow 127.0.0.1;
        allow ::1;
        deny all;
    }

    # A server-level return runs before location matching and would shadow /nginx_status
    location / {
        return 301 https://www.$host$request_uri;
    }
}

# HTTPS redirect for www subdomains
server {
    listen 80;
    listen [::]:80;
    server_name www.front2backdev.com www.domain2.com subdomain1.domain2.com subdomain2.domain2.com;

    location /nginx_status {
        stub_status on;
        access_log off;
        allow 127.0.0.1;
        allow ::1;
        deny all;
    }

    # A server-level return runs before location matching and would shadow /nginx_status
    location / {
        return 301 https://$host$request_uri;
    }
}
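To make the two redirect rules easier to reason about, here is a small Python model of them. It is only a sketch of the intent, not how nginx evaluates anything: the function name `redirect_target` and the two host sets are made up for illustration, and returning `None` for an unknown host is a simplification (nginx would hand such a request to its default server).

```python
# Hypothetical model of the two port-80 server blocks; hostnames are taken from the config.
BARE_DOMAINS = {"front2backdev.com", "domain2.com"}
SUBDOMAIN_HOSTS = {
    "www.front2backdev.com",
    "www.domain2.com",
    "subdomain1.domain2.com",
    "subdomain2.domain2.com",
}


def redirect_target(host, request_uri):
    """Return the HTTPS URL a plain-HTTP request should be redirected to, or None."""
    if host in BARE_DOMAINS:
        # Bare domains gain a www prefix on the way to HTTPS.
        return f"https://www.{host}{request_uri}"
    if host in SUBDOMAIN_HOSTS:
        # Hosts that already carry a subdomain keep their name.
        return f"https://{host}{request_uri}"
    # Simplification: unknown hosts are not modelled here.
    return None


if __name__ == "__main__":
    print(redirect_target("front2backdev.com", "/about/"))
    print(redirect_target("subdomain1.domain2.com", "/"))
```

Every HTTP request should end up on exactly one canonical HTTPS hostname after a single 301.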

letsencrypt and certbot

The following call on the server creates publicly trusted SSL certs for free, which are easy to keep refreshed:

sudo certbot --nginx -d front2backdev.com -d www.front2backdev.com

The resulting certificate gets used in the nginx config. The /nginx_status location is part of synthetic uptime testing calls from Elastic, which I’ll get into later.

server {
    listen 443 ssl http2;
    listen [::]:443 ssl http2;
    server_name www.front2backdev.com front2backdev.com;

    ssl_certificate "/etc/letsencrypt/live/front2backdev.com/fullchain.pem";
    ssl_certificate_key "/etc/letsencrypt/live/front2backdev.com/privkey.pem";

    location /nginx_status {
        stub_status on;
        access_log off;
        allow 127.0.0.1;
        allow ::1;
        deny all;
    }

    location / {
        root /LOCATION_ON_DRIVE/_site;
    }
}
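For a sense of what a monitoring check gets back from /nginx_status, here is a rough Python sketch that parses the plain-text body the stub_status module serves. This is not what Elastic does internally; the function name `parse_stub_status` and the sample numbers are purely illustrative.

```python
import re


def parse_stub_status(text):
    """Parse the plain-text body served by nginx's stub_status module into a dict of ints."""
    active = re.search(r"Active connections:\s+(\d+)", text)
    # The totals line holds three counters: accepts, handled, requests.
    totals = re.search(r"^\s*(\d+)\s+(\d+)\s+(\d+)\s*$", text, re.MULTILINE)
    states = re.search(r"Reading:\s+(\d+)\s+Writing:\s+(\d+)\s+Waiting:\s+(\d+)", text)
    if not (active and totals and states):
        raise ValueError("unexpected stub_status format")
    accepts, handled, requests = (int(n) for n in totals.groups())
    reading, writing, waiting = (int(n) for n in states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
    }


# Illustrative sample body, not real telemetry from my server.
SAMPLE = """Active connections: 291
server accepts handled requests
 16630948 16630948 31070465
Reading: 6 Writing: 179 Waiting: 106
"""

if __name__ == "__main__":
    print(parse_stub_status(SAMPLE))
```

Because of the allow/deny rules, a check like this only works when it runs on the server itself and calls the endpoint over localhost.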

Keeping the certs refreshed

No one likes finding out their SSL certs have gotten old, especially modern browsers. The following line in the root user’s crontab solves this gracefully.

sudo crontab -e

43 6 * * * certbot renew --post-hook "systemctl reload nginx"
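If you want to double check that the renewals are actually happening, a small Python helper can turn a certificate's notAfter string into days remaining. This is a sketch under assumptions: the helper name `days_until_expiry` is mine, and the date string is expected in the format Python's ssl module reports (for example from `getpeercert()["notAfter"]`).

```python
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Return whole days until a certificate's notAfter timestamp (negative if expired).

    not_after uses the format Python's ssl module reports, e.g. "Jan 15 09:34:43 2018 GMT".
    now is a Unix timestamp; it defaults to the current time.
    """
    expires_at = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return int((expires_at - current) // 86400)


if __name__ == "__main__":
    # Illustrative date only; a real check would read notAfter from the live certificate.
    print(days_until_expiry("Jan 15 09:34:43 2018 GMT"))
```

Since the cron job runs daily and certbot only renews certificates that are close to expiring, the number should never get near zero if the renewals are working.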

Daily Static Generation

The source code for the site is a jekyll site built in markdown. I’ve been very happy with that for many years now. I’ve containerized how that is generated. This daily shell script runs the show:

#!/bin/sh
## install with crontab -e
## 40 6 * * * /home/dave/dev/jekyll/dailyrefresh.sh

echo "starting daily refresh" >>/home/dave/dailycronlog.txt
date >>/home/dave/dailycronlog.txt

cd /home/dave/dev/jekyll/SITE_GITHUB_REPO_1
git pull >>/home/dave/dailycronlog.txt 2>&1

cd /home/dave/dev/jekyll/SITE_GITHUB_REPO_2
git pull >>/home/dave/dailycronlog.txt 2>&1

cd /home/dave/dev/jekyll/SITE_GITHUB_REPO_3
git pull >>/home/dave/dailycronlog.txt 2>&1

cd /home/dave/dev/jekyll
./run-SITE1.sh >>/home/dave/dailycronlog.txt 2>&1
./run-SITE2.sh >>/home/dave/dailycronlog.txt 2>&1
./run-SITE3.sh >>/home/dave/dailycronlog.txt 2>&1

A run-SITE.sh build works like this:

export JEKYLL_VERSION=4.2.2
docker run --rm \
  -p 4000:4000 \
  -e TZ=America/New_York \
  --volume="$PWD/SITE_GITHUB_REPO_1:/srv/jekyll" \
  --volume="$PWD/bundle-SITE1:/usr/local/bundle" \
  -i jekyll/jekyll:$JEKYLL_VERSION \
  jekyll build

As long as my GitHub repo holds the latest markdown changes, the whole site gets regenerated daily, which allows jekyll plugins that compare publish dates to take effect. Now I don’t have to publish live through pull requests in GitHub. I can schedule posts for the future.
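The reason a daily rebuild enables scheduling comes down to one date comparison at build time. Here is a minimal Python sketch of that idea. It is not Jekyll's or any plugin's actual code; the function name `visible_posts` and the sample posts are made up for illustration.

```python
from datetime import datetime, timezone


def visible_posts(posts, build_time):
    """Return the titles of posts whose publish date is not after build_time.

    posts is a list of (title, publish_datetime) pairs.
    """
    return [title for title, publish_at in posts if publish_at <= build_time]


if __name__ == "__main__":
    # Hypothetical posts, one already published and one scheduled for later.
    posts = [
        ("Old post", datetime(2023, 1, 1, tzinfo=timezone.utc)),
        ("Scheduled post", datetime(2023, 6, 1, tzinfo=timezone.utc)),
    ]
    print(visible_posts(posts, datetime(2023, 3, 1, tzinfo=timezone.utc)))
    print(visible_posts(posts, datetime(2023, 6, 2, tzinfo=timezone.utc)))
```

A post dated in the future sits in the repo untouched until the first daily build after its date, at which point it appears with no manual step.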

Bridging RSS and Social Feeds

Using the free tier of Zapier, I poll my site’s RSS feed and push to a Discord channel where I have links for my friends who follow along with one of those personal sites.
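Zapier hides the mechanics, but the bridge boils down to "find feed items I haven't seen, then post them". Here is a rough Python sketch of that loop, without any network calls. It assumes an RSS 2.0 feed with `<item>` elements (an Atom feed would need different element names), and the function name `new_item_payloads` and the example.com links are hypothetical. The JSON body with a `content` field is the shape a Discord webhook accepts.

```python
import json
import xml.etree.ElementTree as ET


def new_item_payloads(rss_xml, seen_links):
    """Build one Discord-style webhook payload per RSS item whose link is not in seen_links."""
    root = ET.fromstring(rss_xml)
    payloads = []
    for item in root.iter("item"):
        link = (item.findtext("link") or "").strip()
        title = (item.findtext("title") or "").strip()
        if not link or link in seen_links:
            continue
        # A webhook message body is JSON with the text under "content".
        payloads.append(json.dumps({"content": f"{title}\n{link}"}))
    return payloads


# Illustrative feed only; titles and links are placeholders.
SAMPLE_FEED = (
    "<rss version='2.0'><channel>"
    "<item><title>Post A</title><link>https://example.com/a</link></item>"
    "<item><title>Post B</title><link>https://example.com/b</link></item>"
    "</channel></rss>"
)

if __name__ == "__main__":
    for payload in new_item_payloads(SAMPLE_FEED, {"https://example.com/a"}):
        print(payload)
```

A self-hosted version of this would also need to store the seen links between runs and actually POST each payload to the webhook URL.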

Obsidian to Jekyll

Using some code from this example, https://github.com/adriansteffan/obsidian-to-jekyll, and adding a few more regular expressions to rewrite img src URLs to point at where I have Obsidian image attachments uploaded in my jekyll projects, I can do a manual push of an Obsidian vault into a jekyll project.

I’m not great at regular expressions but found ChatGPT helped me write all the various changes to ruby and python necessary for this project. It’s a brave new world.

import sys
import os
import re
from pathlib import Path


def cleanup_content(content, custom_replaces):
    if custom_replaces:
        if "Zotero Links: [Local]" in content:
            content = re.sub(r"(?<=^- ).*(?=$)", "Metadata:", content, 1, re.MULTILINE)  # BetterBibtex Ref
        content = re.sub(r"^ *- Zotero Links: \[Local\].*$", "", content, 0, re.MULTILINE)  # Zotero Links

    content = re.sub(r'(?<=\[\[)[^[|]*\|(?=[^]]*\]\])', '', content)  # aliases
    content = re.sub(r"&(?=[^\]\[]*\]\])", "and", content)  # & to and
    content = re.sub(r"!\[\]\((.*?)\)", r"", content, flags=re.MULTILINE)
    content = re.sub(r"!\[\[(.*?)\]\]", r"![[/assets/wiki/\1]]", content, flags=re.MULTILINE)
    return content


def process_directory(input_dir, output_dir, visibility_dict, custom_replaces):
    print(input_dir)

    # private by default
    directory_public = False

    # inherit parent visibility
    parent_dir = str(Path(input_dir).parent.absolute())
    if parent_dir in visibility_dict:
        directory_public = visibility_dict[parent_dir]

    # check for dotfile to overwrite directory visibility
    if os.path.isfile(os.path.join(input_dir, ".public")) or os.path.isfile(os.path.join(input_dir, ".public.md")):
        directory_public = True
    if os.path.isfile(os.path.join(input_dir, ".private")) or os.path.isfile(os.path.join(input_dir, ".private.md")):
        directory_public = False

    print(f"Determination of visibility: {directory_public}")
    visibility_dict[input_dir] = directory_public

    for file in os.listdir(input_dir):
        curr_file_path = os.path.join(input_dir, file)

        if os.path.isdir(curr_file_path):
            process_directory(curr_file_path, output_dir, visibility_dict, custom_replaces)
            continue

        if not file.endswith(".md") or file.startswith("."):
            continue

        with open(curr_file_path, "r") as f:
            content = f.read().lstrip().replace(" ", " ")

        title_clean = file[:-3].replace("&", "and")

        if content.startswith("---\n") and len(content.split("---\n")) >= 3:  # yaml already there
            if "public: " in content.split("---\n")[1]:
                if not content.split("public: ")[1].startswith("yes"):
                    continue
            elif not directory_public:
                continue
            output = f'---\ntitle: "{title_clean}"\n{cleanup_content(content[4:], custom_replaces)}'
        else:
            if not directory_public:
                continue
            output = f'---\ntitle: "{title_clean}"\n---\n{cleanup_content(content, custom_replaces)}'

        with open(os.path.join(output_dir, file.replace("&", "and")), "w") as f:
            f.write(output)


if __name__ == '__main__':
    # an arbitrary third parameter applies my custom string replaces for my setup
    if len(sys.argv) != 3 and len(sys.argv) != 4:
        print("Invalid number of commandline parameters")
        exit(1)

    input_dir = os.path.join(os.getcwd(), os.path.normpath(sys.argv[1]))
    output_dir = os.path.join(os.getcwd(), os.path.normpath(sys.argv[2]))

    if not os.path.isdir(output_dir):
        os.mkdir(output_dir)
    else:
        for f in os.listdir(output_dir):
            os.remove(os.path.join(output_dir, f))

    visibility_dict = dict()
    process_directory(input_dir, output_dir, visibility_dict, len(sys.argv) == 4)
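Since the regular expressions are the least readable part of the script, here is a small standalone demo of three of them on a made-up line of Obsidian markdown. The patterns are copied from the script; the wrapper name `rewrite_links` and the sample sentence are only for illustration.

```python
import re


def rewrite_links(text):
    """Apply the alias, ampersand, and image-embed rewrites from the script, in that order."""
    # [[Target|alias]] -> [[alias]]: drop everything up to and including the pipe.
    text = re.sub(r'(?<=\[\[)[^[|]*\|(?=[^]]*\]\])', '', text)
    # Replace "&" with "and", but only inside a [[wiki link]].
    text = re.sub(r"&(?=[^\]\[]*\]\])", "and", text)
    # ![[file]] -> ![[/assets/wiki/file]]: point image embeds at the uploaded attachments.
    text = re.sub(r"!\[\[(.*?)\]\]", r"![[/assets/wiki/\1]]", text)
    return text


if __name__ == "__main__":
    sample = "See [[Target Note|shown text]], [[Salt & Pepper]] and ![[diagram.png]]."
    print(rewrite_links(sample))
```

Running it on the sample prints `See [[shown text]], [[Salt and Pepper]] and ![[/assets/wiki/diagram.png]].`, which shows that the alias rule keeps the display text and discards the link target.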